Bart Jacobs wrote a wonderful article, "From Hex to UIColor and Back in Swift", and my article builds on top of his work. I was wondering how the same could be achieved in SwiftUI with its Color struct.

From Hex to Color in SwiftUI

You don't need UIKit for this. Create an extension that uses Foundation's Scanner to read an unsigned 64-bit value from the hexadecimal representation, then mask out the red, green, blue, and (optional) alpha components and pass them to Color's initializer.

    import SwiftUI

    extension Color {
        /// Creates a color from a 6-digit (RRGGBB) or 8-digit (RRGGBBAA) hex string.
        /// Surrounding whitespace and "#" characters are ignored.
        init?(hex: String) {
            var hexSanitized = hex.trimmingCharacters(in: .whitespacesAndNewlines)
            hexSanitized = hexSanitized.replacingOccurrences(of: "#", with: "")

            var rgb: UInt64 = 0

            // Color's initializer takes Double components in the range 0...1.
            var r: Double = 0.0
            var g: Double = 0.0
            var b: Double = 0.0
            var a: Double = 1.0

            let length = hexSanitized.count

            // Parse the hexadecimal digits into a single integer.
            guard Scanner(string: hexSanitized).scanHexInt64(&rgb) else { return nil }

            if length == 6 {
                // RRGGBB: mask each byte, shift it down, and normalize.
                r = Double((rgb & 0xFF0000) >> 16) / 255.0
                g = Double((rgb & 0x00FF00) >> 8) / 255.0
                b = Double(rgb & 0x0000FF) / 255.0
            } else if length == 8 {
                // RRGGBBAA: the lowest byte carries the alpha value.
                r = Double((rgb & 0xFF000000) >> 24) / 255.0
                g = Double((rgb & 0x00FF0000) >> 16) / 255.0
                b = Double((rgb & 0x0000FF00) >> 8) / 255.0
                a = Double(rgb & 0x000000FF) / 255.0
            } else {
                return nil
            }

            self.init(red: r, green: g, blue: b, opacity: a)
        }
    }
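To make the bit masking concrete, here is a minimal sketch that extracts the same components without SwiftUI, so it can run in a playground or on the command line. The helper name `rgbaComponents(fromHex:)` is hypothetical, and it swaps `Scanner` for the standard library's `UInt64(_:radix:)`. Both are my assumptions for illustration, not part of the extension above.

```swift
import Foundation

/// Hypothetical helper (not part of the extension above): splits a hex
/// string into normalized RGBA components in the range 0...1.
func rgbaComponents(fromHex hex: String) -> (r: Double, g: Double, b: Double, a: Double)? {
    // Same sanitizing as in the extension: trim whitespace, drop "#".
    let digits = hex
        .trimmingCharacters(in: .whitespacesAndNewlines)
        .replacingOccurrences(of: "#", with: "")

    // Assumption: UInt64(_:radix:) stands in for Scanner.scanHexInt64.
    // Reject anything that is not purely hexadecimal before converting.
    guard digits.allSatisfy({ $0.isHexDigit }),
          let value = UInt64(digits, radix: 16) else { return nil }

    switch digits.count {
    case 6: // RRGGBB
        return (r: Double((value & 0xFF0000) >> 16) / 255.0,
                g: Double((value & 0x00FF00) >> 8) / 255.0,
                b: Double(value & 0x0000FF) / 255.0,
                a: 1.0)
    case 8: // RRGGBBAA
        return (r: Double((value & 0xFF000000) >> 24) / 255.0,
                g: Double((value & 0x00FF0000) >> 16) / 255.0,
                b: Double((value & 0x0000FF00) >> 8) / 255.0,
                a: Double(value & 0x000000FF) / 255.0)
    default:
        return nil
    }
}

if let orange = rgbaComponents(fromHex: "#FF8000") {
    // r = 1.0, g = 128/255 (about 0.502), b = 0.0, a = 1.0
    print(orange.r, orange.g, orange.b, orange.a)
}
```

For "#FF8000" the integer is 0xFF8000: masking with 0xFF0000 and shifting right by 16 leaves 0xFF (255), masking with 0x00FF00 and shifting by 8 leaves 0x80 (128), and the lowest byte is 0x00.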

Two differences from Bart's article:

- Here I use UInt64 and scanHexInt64 because scanHexInt32 was deprecated in iOS 13.
- Here I use the Color initializer and pass alpha as opacity (there is no alpha parameter in Color's initializer). I can avoid using UIKit :)

Remark: It is also possible to initialize Color with a Core Graphics representation. Be aware that you have to create the CGColor with init(srgbRed:green:blue:alpha:) and not init(red:green:blue:alpha:) because iOS uses the sRGB color space.
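As a rough illustration of this remark, the sketch below builds the same orange twice: once through Core Graphics and once with the plain SwiftUI initializer and an explicit sRGB color space. The component values are arbitrary, and I am assuming the unlabeled `Color(_:)` initializer that takes a `CGColor` is available (iOS 14 / macOS 11 or later).

```swift
import SwiftUI
import CoreGraphics

// Arbitrary example components in the range 0...1.
let red: CGFloat = 1.0
let green: CGFloat = 0.5
let blue: CGFloat = 0.0
let alpha: CGFloat = 1.0

// Core Graphics route: create the CGColor explicitly in the sRGB color space.
let cgColor = CGColor(srgbRed: red, green: green, blue: blue, alpha: alpha)
let fromCoreGraphics = Color(cgColor)

// SwiftUI-only route: the same components with the sRGB color space spelled out.
let fromComponents = Color(.sRGB,
                           red: Double(red),
                           green: Double(green),
                           blue: Double(blue),
                           opacity: Double(alpha))
```

Both values should describe the same color; the second route is the one the extension above relies on, just with the color space written out.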

From Color to Hex in SwiftUI

Based on Apple's documentation, I was hoping to get the Core Graphics representation directly from Color through its optional instance property cgColor. The documentation says:

> You can get a CGColor instance from a constant SwiftUI color. This includes colors you create from a Core Graphics color, from RGB or HSB components, or from constant UIKit and AppKit colors. For a dynamic color, like one you load from an Asset Catalog using init(_:bundle:), or one you create from a dynamic UIKit or AppKit color, this property is nil.

However, in my tests the property was always nil, even for constant SwiftUI colors like Color.blue.

UIKit is needed to reliably get the underlying Core Graphics representation. Once we have created a UIColor from the Color, accessing the Core Graphics representation and its color components is easy. Please read Bart's article if you want to know more about the string format specifiers used here.

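    import SwiftUI
    import UIKit

    extension Color {
        /// Returns the color as RRGGBB, or as RRGGBBAA if it is not fully opaque.
        func toHex() -> String? {
            // Bridge to UIKit to reach the Core Graphics representation.
            let uic = UIColor(self)

            // A color in a non-RGB space (for example grayscale) has fewer than three components.
            guard let components = uic.cgColor.components, components.count >= 3 else {
                return nil
            }

            let r = Float(components[0])
            let g = Float(components[1])
            let b = Float(components[2])
            var a = Float(1.0)

            // The fourth component, if present, is alpha.
            if components.count >= 4 {
                a = Float(components[3])
            }

            // Append the alpha byte only when the color is not fully opaque.
            if a != Float(1.0) {
                return String(format: "%02lX%02lX%02lX%02lX", lroundf(r * 255), lroundf(g * 255), lroundf(b * 255), lroundf(a * 255))
            } else {
                return String(format: "%02lX%02lX%02lX", lroundf(r * 255), lroundf(g * 255), lroundf(b * 255))
            }
        }
    }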
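The formatting step can be tried in isolation. The following sketch assumes the components are already available as values between 0 and 1. The function name `hexString(red:green:blue:alpha:)` is made up for this example, and it uses `rounded()` on Double in place of `lroundf` on Float and adds clamping as an extra safeguard, so treat it as an approximation of the extension above rather than a drop-in replacement.

```swift
import Foundation

/// Hypothetical helper: formats normalized components (0...1) as RRGGBB,
/// or as RRGGBBAA when the color is not fully opaque.
func hexString(red: Double, green: Double, blue: Double, alpha: Double = 1.0) -> String {
    // Clamp to 0...1 (an addition for this sketch), scale to 0...255,
    // and round to the nearest integer.
    func byte(_ component: Double) -> Int {
        Int((min(max(component, 0.0), 1.0) * 255.0).rounded())
    }

    if alpha != 1.0 {
        return String(format: "%02lX%02lX%02lX%02lX",
                      byte(red), byte(green), byte(blue), byte(alpha))
    }
    return String(format: "%02lX%02lX%02lX", byte(red), byte(green), byte(blue))
}

print(hexString(red: 1.0, green: 0.5, blue: 0.0))             // FF8000
print(hexString(red: 1.0, green: 0.5, blue: 0.0, alpha: 0.5)) // FF800080
```

The green component shows why rounding matters: 0.5 * 255 is 127.5, which rounds up to 128 and is written as 80.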

Summary

It is possible to create a Color instance from a hex string without UIKit. But you need UIKit as a bridging mechanism to create a hex string from a Color instance.

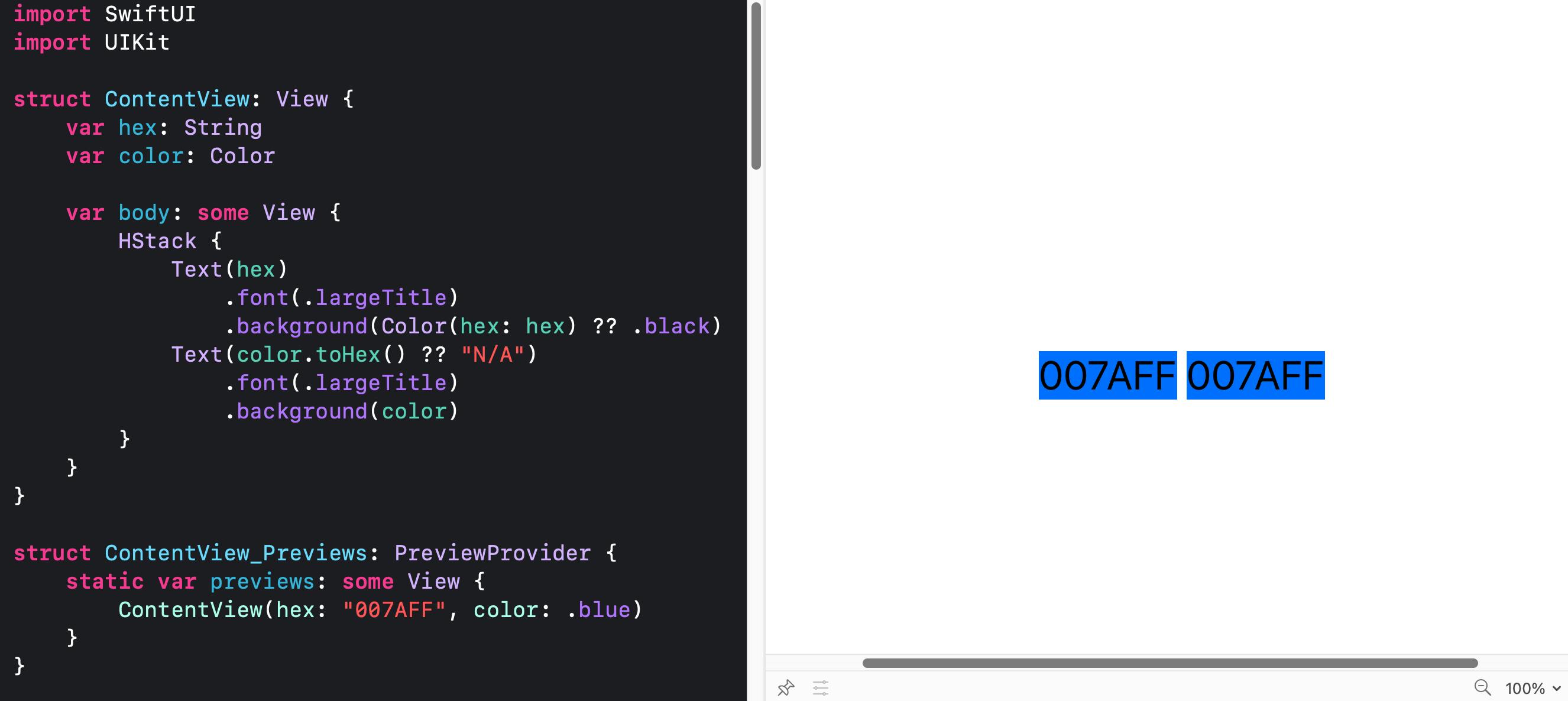

The two extensions work nicely and produce the same visual color as demonstrated below.