PlantUML Text Encoding in Swift

TIL how to use the Compression framework (to deflate) and how to implement a base64 algorithm (with a custom mapping table) in Swift.

This blog post describes how to implement PlantUML Text Encoding in Swift.

Technically, it showcases:

- Compressing Data with Buffer Compression

- A Swift implementation of the Base64 algorithm

The code is part of my project SwiftPlantUML.

Specification

According to the PlantUML Text Encoding specification, the text diagram needs to be

- Encoded in UTF-8

- Compressed using the Deflate (or Brotli) algorithm

- Re-encoded in ASCII using a transformation close to base64

Implementation

I created a value type PlantUMLText conforming to RawRepresentable. Swift synthesizes the memberwise initializer init(rawValue:), which also satisfies the protocol's init?(rawValue:) requirement. The encoded text is accessible through a computed property.

```swift
import Foundation

struct PlantUMLText: RawRepresentable {
    var rawValue: String

    var encodedValue: String {
        // (1) deflate and (2) encode; both helpers are implemented below
        base64plantuml(deflate(rawValue))
    }
}
```
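Here is a minimal sketch that checks the claim about the initializer. The memberwise init(rawValue:) can be called directly, and it also fulfils the protocol's failable init?(rawValue:) when used generically. `Diagram` and `makeValue` are hypothetical names that stand in for PlantUMLText.

```swift
// Hypothetical stand-in for PlantUMLText: a struct whose only stored
// property is `rawValue`.
struct Diagram: RawRepresentable {
    var rawValue: String
}

// Generic helper that only knows the protocol requirement `init?(rawValue:)`.
// The non-failable memberwise initializer fulfils it.
func makeValue<T: RawRepresentable>(_ raw: T.RawValue) -> T? {
    T(rawValue: raw)
}

// Called directly, the memberwise initializer is non-failable.
let direct = Diagram(rawValue: "@startuml\nBob -> Alice : hello\n@enduml")
print(direct.rawValue)

// Called through the protocol, the result is an Optional.
let viaProtocol: Diagram? = makeValue("@startuml\n@enduml")
print(viaProtocol?.rawValue ?? "nil")
```

This is why the struct needs no hand-written initializer.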

Encoding a string in UTF-8 is simple in Swift, so I don't list it as an explicit step. Let's focus on

- deflating

- re-encoding in ASCII with the base64 algorithm, but with a different mapping array.
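For reference, the UTF-8 step is just the string's `utf8` view. The number of UTF-8 bytes can differ from the number of characters, and this matters whenever a buffer is sized for the bytes. The small sketch below illustrates this with an arbitrary sample string.

```swift
// "Café", written with an escape so the "é" is the single scalar U+00E9
let text = "Caf\u{E9}"

let characterCount = text.count      // 4 characters
let utf8Bytes = Array(text.utf8)     // [67, 97, 102, 195, 169]
let byteCount = utf8Bytes.count      // 5 bytes: "é" takes two bytes in UTF-8

print(characterCount, byteCount)     // 4 5
```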

Deflate

Apple's Compression framework implements the zlib encoder (at level 5 only) for COMPRESSION_ZLIB. The encoded format is the raw DEFLATE format as described in IETF RFC 1951, i.e. without the zlib header and checksum.

Note to myself: do not forget to import the framework.

```swift
import Compression
```

Then I created a convenience function deflate in a private extension of PlantUMLText.

The implementation is adapted from Apple's article "Compressing and Decompressing Data with Buffer Compression".

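```swift
private extension PlantUMLText {
    /**
     Deflates according to IETF RFC 1951.

     https://developer.apple.com/documentation/accelerate/compressing_and_decompressing_data_with_buffer_compression

     - Parameter text: diagram textual description ("@startuml ... @enduml")
     - Returns: compressed data in the raw DEFLATE format (IETF RFC 1951), without a zlib header
     */
    func deflate(_ text: String) -> NSData {
        // UTF-8 encode the text; buffer sizes are in bytes, not Characters
        let sourceBuffer = Array(text.utf8)

        // Generous headroom (an assumption, not a documented bound): tiny or
        // incompressible input can deflate to more bytes than it started with,
        // and compression_encode_buffer returns 0 if the destination is too small
        let destinationCapacity = sourceBuffer.count + sourceBuffer.count / 16 + 64
        let destinationBuffer = UnsafeMutablePointer<UInt8>.allocate(capacity: destinationCapacity)
        defer { destinationBuffer.deallocate() }

        let algorithm = COMPRESSION_ZLIB
        let compressedSize = compression_encode_buffer(destinationBuffer, destinationCapacity,
                                                       sourceBuffer, sourceBuffer.count,
                                                       nil,
                                                       algorithm)

        // Copy the compressed bytes so the temporary buffer can be freed
        return NSData(bytes: destinationBuffer, length: compressedSize)
    }
}
```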
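A round trip through `compression_decode_buffer` is a handy way to check that the output really is DEFLATE data that can be read back. In this sketch, `deflateBytes` and `inflateBytes` are hypothetical helpers and not part of SwiftPlantUML. Decoding needs the original size (or a large enough buffer), because raw DEFLATE does not store it.

```swift
import Compression

// Hypothetical helper: raw DEFLATE encode, with the destination sized from
// the UTF-8 byte count plus headroom (an assumption, as above).
func deflateBytes(_ source: [UInt8]) -> [UInt8] {
    let capacity = source.count + source.count / 16 + 64
    var destination = [UInt8](repeating: 0, count: capacity)
    let size = compression_encode_buffer(&destination, capacity,
                                         source, source.count,
                                         nil, COMPRESSION_ZLIB)
    return Array(destination.prefix(size))
}

// Hypothetical helper: inflate raw DEFLATE data back into bytes.
// `expectedSize` must be at least the original size, or output is truncated.
func inflateBytes(_ compressed: [UInt8], expectedSize: Int) -> [UInt8] {
    var destination = [UInt8](repeating: 0, count: expectedSize)
    let size = compression_decode_buffer(&destination, expectedSize,
                                         compressed, compressed.count,
                                         nil, COMPRESSION_ZLIB)
    return Array(destination.prefix(size))
}

let original = Array("@startuml\nBob -> Alice : hello\n@enduml".utf8)
let compressed = deflateBytes(original)
let restored = inflateBytes(compressed, expectedSize: original.count)
print(compressed.count, restored == original)
```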

Base 64 algorithm

I also created a convenience API to encode the compressed data.

```swift
// implementation based on SwiftyBase64 (created by Doug Richardson)
internal extension PlantUMLText {
    /**
     Encodes the bytes of `compressedData` using the Base64 algorithm (as described by RFC 4648 section 4) **BUT uses a different translation table / alphabet**.

     For PlantUML, the mapping array for values 0-63 is:

     `0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz-_`

     See https://plantuml.com/en/text-encoding for more info

     - Parameter compressedData: of a deflated string
     - Returns: encoded string
     */
    func base64plantuml(_ compressedData: NSData) -> String {
        // TODO
    }
}
```

The implementation is trickier. It follows the base64 algorithm, but with a different mapping table. What does this mean?

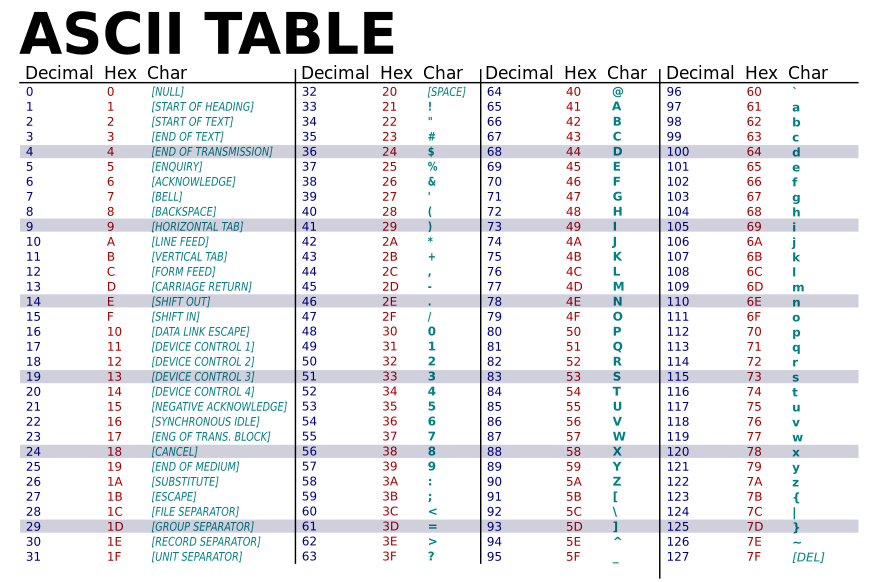

Base64 represents binary data as text, using 64 printable ASCII characters. The standard alphabet comprises 26 uppercase letters, 26 lowercase letters, 10 digits, the plus sign (+) and the forward slash (/). There is also a 65th character used for padding: the equal sign (=).

Each value from 0 to 63 is used to look up a character in the alphabet. For example, the value 45 maps to t in the standard alphabet. Don't confuse this index with a character's ASCII code: in the ASCII table, the decimal value 45 is the minus sign (-).

For the standard alphabet the mapping array for values 0-63 is:

ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/

For PlantUML, the mapping array for values 0-63 is:

0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz-_
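Because only the alphabet differs, the lookup can be illustrated directly. The sketch below also shows an alternative to a hand-written encoder, which is not the approach taken in this post. It encodes with Foundation's `base64EncodedString()` and then translates each character to the one at the same index in PlantUML's alphabet. `translateToPlantUML` is a hypothetical helper name.

```swift
import Foundation

// The two 64-character alphabets, indexed by 6-bit value 0-63.
let standardAlphabet = Array("ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/")
let plantUMLAlphabet = Array("0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz-_")

// Same value, different character.
print(standardAlphabet[45], plantUMLAlphabet[45]) // t j

// Map each character of a standard base64 string to the character at the
// same index in the PlantUML alphabet; "=" padding is left unchanged.
func translateToPlantUML(_ standardBase64: String) -> String {
    var lookup: [Character: Character] = [:]
    for (standardChar, plantUMLChar) in zip(standardAlphabet, plantUMLAlphabet) {
        lookup[standardChar] = plantUMLChar
    }
    return String(standardBase64.map { lookup[$0] ?? $0 })
}

let encoded = Data("Man".utf8).base64EncodedString() // "TWFu"
print(translateToPlantUML(encoded))                   // JM5k
```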

Let's build the mapping array from 8-bit unsigned integers (the ASCII codes of the characters): 64 entries, plus the padding character at index 64.

```swift
let encodingAlphabetTable: [UInt8] = [
    // 0-9: digits "0"..."9" (ASCII 48-57)
    48, 49, 50, 51, 52, 53, 54, 55, 56, 57,
    // 10-35: uppercase "A"..."Z" (ASCII 65-90)
    65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77,
    78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90,
    // 36-61: lowercase "a"..."z" (ASCII 97-122)
    97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109,
    110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122,
    45, // 62 = "-"
    95, // 63 = "_"
    // PADDING FOLLOWS, not used during lookups
    61, // 64 = "="
]
```
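Typing 65 ASCII codes by hand is error-prone. As an alternative, and not the approach used above, the same table can be derived from the alphabet string. `alphabetTable` is a hypothetical name for this sketch.

```swift
// Derive the same 65-entry table (64 symbols + "=" padding) from the alphabet
// string; `.utf8` yields each ASCII character's code as a UInt8.
let alphabetTable: [UInt8] = Array(
    "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz-_=".utf8
)

print(alphabetTable.count)                  // 65
print(alphabetTable[10], alphabetTable[62]) // 65 45
```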

Now that we have the mapping array, let's go over the implementation. I took it from Doug Richardson's project SwiftyBase64.

```swift
func base64plantuml(_ compressedData: NSData) -> String {
    let bytes = [UInt8](compressedData)
    var encodedBytes: [UInt8] = []
    let padding = encodingAlphabetTable[64]

    var i = 0
    let count = bytes.count

    // Encode complete 24-bit groups (3 input bytes -> 4 characters)
    while i + 3 <= count {
        let one = bytes[i] >> 2
        let two = ((bytes[i] & 0b11) << 4) | ((bytes[i + 1] & 0b1111_0000) >> 4)
        let three = ((bytes[i + 1] & 0b0000_1111) << 2) | ((bytes[i + 2] & 0b1100_0000) >> 6)
        let four = bytes[i + 2] & 0b0011_1111

        encodedBytes.append(encodingAlphabetTable[Int(one)])
        encodedBytes.append(encodingAlphabetTable[Int(two)])
        encodedBytes.append(encodingAlphabetTable[Int(three)])
        encodedBytes.append(encodingAlphabetTable[Int(four)])

        i += 3
    }

    if i + 2 == count {
        // (3) The final quantum of encoding input is exactly 16 bits; here, the
        // final unit of encoded output will be three characters followed by
        // one "=" padding character.
        let one = bytes[i] >> 2
        let two = ((bytes[i] & 0b11) << 4) | ((bytes[i + 1] & 0b1111_0000) >> 4)
        let three = ((bytes[i + 1] & 0b0000_1111) << 2)

        encodedBytes.append(encodingAlphabetTable[Int(one)])
        encodedBytes.append(encodingAlphabetTable[Int(two)])
        encodedBytes.append(encodingAlphabetTable[Int(three)])
        encodedBytes.append(padding)
    } else if i + 1 == count {
        // (2) The final quantum of encoding input is exactly 8 bits; here, the
        // final unit of encoded output will be two characters followed by
        // two "=" padding characters.
        let one = bytes[i] >> 2
        let two = ((bytes[i] & 0b11) << 4)

        encodedBytes.append(encodingAlphabetTable[Int(one)])
        encodedBytes.append(encodingAlphabetTable[Int(two)])
        encodedBytes.append(padding)
        encodedBytes.append(padding)
    } else {
        // (1) The final quantum of encoding input is an integral multiple of 24
        // bits; here, the final unit of encoded output will be an integral
        // multiple of 4 characters with no "=" padding.
        assert(i == count)
    }

    // The table holds ASCII codes, so the bytes decode as UTF-8
    return String(decoding: encodedBytes, as: Unicode.UTF8.self)
}
```

If you struggle to understand the implementation, then you are not alone :)

It helps to read up on the Base64 encoding specification (RFC 4648, section 4).

The encoding process represents 24-bit groups of input bits as output strings of 4 encoded characters. Proceeding from left to right, a 24-bit input group is formed by concatenating 3 8-bit input groups. These 24 bits are then treated as 4 concatenated 6-bit groups, each of which is translated into a single character in the base 64 alphabet.

Each 6-bit group is used as an index into an array of 64 printable characters.

That's the job of the while loop.

```swift
while i + 3 <= count {
    let one = bytes[i] >> 2
    let two = ((bytes[i] & 0b11) << 4) | ((bytes[i + 1] & 0b1111_0000) >> 4)
    let three = ((bytes[i + 1] & 0b0000_1111) << 2) | ((bytes[i + 2] & 0b1100_0000) >> 6)
    let four = bytes[i + 2] & 0b0011_1111

    encodedBytes.append(encodingAlphabetTable[Int(one)])
    encodedBytes.append(encodingAlphabetTable[Int(two)])
    encodedBytes.append(encodingAlphabetTable[Int(three)])
    encodedBytes.append(encodingAlphabetTable[Int(four)])

    i += 3
}
```
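To see the shifts and masks at work, here is the loop body applied by hand to one 24-bit group: the three bytes of the string "Man". The input is arbitrary. The comments show the resulting 6-bit patterns.

```swift
// One 24-bit group: the three bytes of "Man" (0x4D, 0x61, 0x6E).
let group: [UInt8] = Array("Man".utf8)

// Same shifts and masks as in the loop above.
let one = group[0] >> 2                                                        // 010011 = 19
let two = ((group[0] & 0b11) << 4) | ((group[1] & 0b1111_0000) >> 4)           // 010110 = 22
let three = ((group[1] & 0b0000_1111) << 2) | ((group[2] & 0b1100_0000) >> 6)  // 000101 = 5
let four = group[2] & 0b0011_1111                                              // 101110 = 46

let indices = [one, two, three, four].map { Int($0) }
let plantUMLAlphabet = Array("0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz-_")

print(indices)                                      // [19, 22, 5, 46]
print(String(indices.map { plantUMLAlphabet[$0] })) // JM5k
```

So every 3 input bytes always yield exactly 4 output characters.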

Bitwise operators do the transformation. I highly recommend reading "Computer science in JavaScript: Base64 encoding", which explains very well how bitwise operators can be used for base64 encoding. You don't need to know JavaScript to follow it.

Special processing is performed if fewer than 24 bits are available at the end of the data being encoded. A full encoding quantum is always completed at the end of a quantity. When fewer than 24 input bits are available in an input group, bits with value zero are added (on the right) to form an integral number of 6-bit groups. Padding at the end of the data is performed using the '=' character.

That's handled after the while loop.

```swift
if i + 2 == count {
    // (3) The final quantum of encoding input is exactly 16 bits; here, the
    // final unit of encoded output will be three characters followed by
    // one "=" padding character.
    let one = bytes[i] >> 2
    let two = ((bytes[i] & 0b11) << 4) | ((bytes[i + 1] & 0b1111_0000) >> 4)
    let three = ((bytes[i + 1] & 0b0000_1111) << 2)

    encodedBytes.append(encodingAlphabetTable[Int(one)])
    encodedBytes.append(encodingAlphabetTable[Int(two)])
    encodedBytes.append(encodingAlphabetTable[Int(three)])
    encodedBytes.append(padding)
} else if i + 1 == count {
    // (2) The final quantum of encoding input is exactly 8 bits; here, the
    // final unit of encoded output will be two characters followed by
    // two "=" padding characters.
    let one = bytes[i] >> 2
    let two = ((bytes[i] & 0b11) << 4)

    encodedBytes.append(encodingAlphabetTable[Int(one)])
    encodedBytes.append(encodingAlphabetTable[Int(two)])
    encodedBytes.append(padding)
    encodedBytes.append(padding)
} else {
    // (1) The final quantum of encoding input is an integral multiple of 24
    // bits; here, the final unit of encoded output will be an integral
    // multiple of 4 characters with no "=" padding.
    assert(i == count)
}
```
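The three cases can also be seen as one rule:

1. Fill the missing bytes with zero bits.
2. Compute the four 6-bit groups as usual.
3. Replace the characters that carry only filler bits with "=".

The compact sketch below makes that rule explicit and checks the 8-bit, 16-bit and 24-bit cases. It is a restructured variant for illustration, not the SwiftPlantUML code. `plantUMLBase64Sketch` is a hypothetical name.

```swift
// Hypothetical compact variant: missing input bytes are treated as zero bits
// (as RFC 4648 describes), and characters that would encode only those filler
// bits are replaced by "=".
func plantUMLBase64Sketch(_ bytes: [UInt8]) -> String {
    let alphabet = Array("0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz-_")
    var output = ""
    var i = 0
    while i < bytes.count {
        let available = min(3, bytes.count - i)           // 1, 2 or 3 real bytes
        let b0 = bytes[i]
        let b1: UInt8 = available > 1 ? bytes[i + 1] : 0  // zero fill
        let b2: UInt8 = available > 2 ? bytes[i + 2] : 0  // zero fill

        let indices = [
            b0 >> 2,
            ((b0 & 0b11) << 4) | (b1 >> 4),
            ((b1 & 0b1111) << 2) | (b2 >> 6),
            b2 & 0b11_1111,
        ]
        // n real bytes produce n + 1 meaningful characters; pad the rest.
        for (position, index) in indices.enumerated() {
            output.append(position <= available ? alphabet[Int(index)] : "=")
        }
        i += 3
    }
    return output
}

print(plantUMLBase64Sketch(Array("M".utf8)))   // JG==
print(plantUMLBase64Sketch(Array("Ma".utf8)))  // JM4=
print(plantUMLBase64Sketch(Array("Man".utf8))) // JM5k
```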

As the final step, let's assemble the encoded string.

Each 6-bit group has already been used as an index into the mapping array, so encodedBytes holds the ASCII codes of the output characters. All that's left is to turn those bytes into a String.

```swift
return String(decoding: encodedBytes, as: Unicode.UTF8.self)
```

The complete source can be found on GitHub.